Authors: Richard Hathaway, Rufei (Lilian) Wu, Ruidi (Rachel) Zhao, Qingyang (Jojo) Zhou

Executive Summary

This project attempts to predict weekly total room nights for hotel bookings up to four weeks into the future, using previous hotel bookings, air passenger traffic volume, air shopping data, holidays, and seasonality. Three different model types (Prophet, SARIMAX, and Random Forest) were used to make these predictions. The Random Forest model gave the lowest average MAPE and performed on average 53% better than a baseline model, although different model types performed better for different hotels. We found that the most important predictors vary between hotels. Thus, a stepwise feature selection algorithm was implemented to find the best combination of predictors. A Python model pipeline was created to allow the selection of the best model for each hotel's predictions.

Introduction

Sabre is a software and technology company that partners with airlines, hoteliers, agencies, and other travel partners to retail, distribute, and fulfill travel. The company is investing in a technology evolution to create a new marketplace for personalized travel in a cloud-based environment. Sabre is committed to helping customers operate more efficiently, increase revenue, and offer personalized traveler experiences through artificial intelligence, machine learning, and real-time data and analytics. This technology is used as the intelligence behind mobile apps, airport check-in kiosks, online travel sites, airline and hotel reservation networks, travel agent terminals, and scores of other travel solutions.

The ability to predict future hotel bookings has many benefits in the travel industry. Hotels can use predictions to aid pricing and revenue management strategies. However, not much work has been done in this area. Previous work in predicting hotel bookings has mostly focused on data within the hotel industry.

This project seeks to incorporate data from the airline industry with the hotel industry data to augment the strength of these predictions. Furthermore, we strive to understand what factors affect fluctuations in hotel bookings and if they are consistent or different across different hotels.

We attempted to predict the weekly total room nights for a given hotel for up to four weeks into the future. The response variable of our models, room nights, is calculated as the length of stay of a reservation multiplied by the number of rooms booked for the reservation. The room nights of all reservations for a hotel for each week were summed, assigning reservations to the week in which the reservation began (arrival date).

The present work contributes to understanding of the inner workings of the dynamic and complex travel industry that connects people from all over the world.

Data Description

This project used one primary data source and three supplementary ones. The primary data source was hotel booking data, where each record represents one hotel booking. Each record contains the following attributes: booking date, arrival date, length of stay, number of rooms booked, advance purchase, and room nights (length of stay * number of rooms booked).

The hotel booking data covers 36 hotels across four different cities and spans January 2019 to August 2021. Hotels carry anonymous labels in the form of ‘City 1 Hotel 6’. Some hotels are airport hotels, while others are in the city center or on the outskirts of the city. However, the data does not indicate which hotels are of which type, and the exact city names were not disclosed. Because of significant disruptions in travel caused by COVID-19, the analysis was limited to data from 2018-12-31 to 2020-03-01.

Additionally, the following three datasets were used:

- Sabre’s air passenger traffic data, which contains the number of passengers that arrived by air travel to each city for each month, along with projections of air passenger traffic for future months.

- Sabre’s air shopping data, which contains the number of shops made in Sabre’s GDS for air travel to each city, where each row represents one combination of departure date and booking date. For example, the dataset contains the number of shops made on 2019-01-01 for a departure on 2019-03-01 to City 1, the number of shops made on 2019-01-02 for a departure on 2019-03-01 to City 1, and so on for all combinations of shop date and departure date.

- A list of US Federal holidays from 2011 to 2030, which was scraped from the U.S. Office of Personnel Management website [1].

Exploratory Data Analysis (EDA)

EDA was performed on all the available datasets at the city level to understand the existing patterns. There were several unexpected spikes in the hotel booking data, which are addressed as outliers in the preprocessing steps. Strong seasonality is observed in the air passenger traffic data but is not clear in the air shopping data because of the limited date range of that dataset. Significant disruptions are observed in all datasets around April 2020 due to the impact of COVID-19.

Hotel Booking Data

Figure 1 plots the total room nights by arrival week for each city. Around April 2020, the value of total room nights dropped significantly due to the impact of COVID-19. An unexpected spike in City 2 around August 2020 is observed, with three weeks having total room nights larger than 10,000. Investigation into the data reveals that there were 5 bookings in these three weeks that had total room nights ranging from 2,000 to 7,000. These are examples of influential outliers that were handled during the preprocessing stage.

Figure 1. Total room nights by arrival week for each city


To investigate whether monthly seasonality exists, total room nights by day of the month for each city was plotted. Figure 2 suggests that day of the month might be an influential predictor for total room nights due to fluctuations in values for each day. However, no obvious pattern of seasonality can be observed in the graph.

Figure 2. Total room nights by Day of Month for each city

Air Shopping Data

Similar to the total room nights, a sharp drop in air shops was observed around April 2020 due to the impact of COVID-19. However, it is unclear from the plot whether any seasonality exists, because the dataset only contains one year of data before the pandemic and thus does not have enough seasonal cycles to show a pattern.

Air Passenger Traffic Data

Figure 3 shows the monthly air passenger traffic for each city. Again, a sharp decline in air passenger traffic occurred around April 2020 because of COVID-19. Air passenger traffic began to recover in February 2021, but it had still not reached the pre-pandemic level by the end of the data. Before April 2020, similar patterns repeated several times, which suggests that strong seasonality exists.

Figure 3. Total air passenger traffic by month for each city


Preprocessing

Following EDA, preprocessing steps were performed to prepare the data for modeling and to eliminate extreme outliers that introduced noise into the model learning process. The preprocessing steps were divided into four stages: weekly aggregation, data scope adjustment, outlier removal and imputation, and generation of additional predictors. These four stages produced a data frame that can be passed to the models for training.

Weekly Aggregation

The original hotel booking data is booking-level data, where each record corresponds to one reservation at the hotel. In order to generate a time series that could be modeled, the hotel booking data was aggregated to the weekly level. For each hotel, all records in the dataset were grouped by the Monday of the arrival week, and total room nights were summed for each Monday. We focused on weekly predictions because there was too much noise in the daily data for the models to capture a clear trend. Additionally, tuning models on daily data was too computationally expensive, and aggregating to the weekly level significantly reduced the runtime.
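The weekly aggregation described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the project's actual code; the function names and the tuple layout of a booking are assumptions made for the example.

```python
from collections import defaultdict
from datetime import date, timedelta


def week_start(d: date) -> date:
    """Return the Monday of the week that contains d."""
    return d - timedelta(days=d.weekday())


def weekly_room_nights(bookings):
    """Sum room nights per arrival week.

    bookings: iterable of (arrival_date, length_of_stay, rooms_booked)
    tuples (a hypothetical layout chosen for this sketch).
    """
    totals = defaultdict(int)
    for arrival, length_of_stay, rooms in bookings:
        # Room nights = length of stay * number of rooms booked.
        totals[week_start(arrival)] += length_of_stay * rooms
    return dict(sorted(totals.items()))


bookings = [
    (date(2019, 1, 1), 2, 1),  # Tuesday, 2 room nights
    (date(2019, 1, 6), 3, 2),  # Sunday of the same week, 6 room nights
    (date(2019, 1, 7), 1, 1),  # Monday of the following week, 1 room night
]
weekly = weekly_room_nights(bookings)
```

Here the first two bookings fall in the week starting Monday 2018-12-31 and the third in the week starting 2019-01-07.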

Data Scope Adjustment

While performing EDA, two problems were discovered in the hotel booking data. The first problem is that some hotels (such as City 2 Hotel 3, City 3 Hotel 2, and City 3 Hotel 6) have large gaps in record dates between bookings at the beginning of the time series. For example, the data for City 3 Hotel 2 begins with one booking on 2019-03-21 and then suddenly jumps to 2019-04-02. One potential explanation was that these nonconsecutive records might be test bookings automatically made by the computer when the hotel first partnered with Sabre. Therefore, to eliminate the impact of these abnormal test bookings, they were removed in the preprocessing stage.

The second problem is that sharp declines caused by COVID-19 are observed in several datasets, including the hotel booking, air shopping, and air passenger traffic data. Because COVID-19 made the data and even the future of the travel industry unpredictable, it would be very challenging for the models to capture a meaningful and stable trend that could be used for predictions. Thus, the project focused only on the data before 2020-03-02, which is an estimated date of when the impact of COVID-19 started. This scope limitation eliminated the unpredictable trends brought by COVID-19; however, future work could investigate whether there were any predictable trends during and after the COVID-19 pandemic.

Outlier Removal and Imputation

Outlier Removal Methods

The significant outliers in the data were removed to eliminate noise that could mislead the models. Outliers might exist in the data due to unobservable events, such as conferences, local events near the hotel, or temporary closures of hotel rooms. Three methods were used to detect outliers: Low Pass Filter, Median Absolute Deviation, and Isolation Forest.

Low Pass Filter (LPF)

Low Pass Filter [2] removes outliers by calculating the centered rolling average of the time series data and then removing the outliers based on Z-score. It uses a fixed-size, centered window that slides over the time series data. Within each window, the data point at the center of the window is considered an outlier if it does not fall into the region bounded by the upper and lower bounds. For each rolling window, the lower bound and upper bound are calculated as follows:

- Lower bound: Q1 - k * IQR

- Upper bound: Q3 + k * IQR

where Q1 and Q3 are the 1st and 3rd quartiles of the window, and k stands for the number of IQRs (IQR = Q3 - Q1) away from the 1st/3rd quartile. This is a parameter that can be tuned.

Median Absolute Deviation (MAD)

Median Absolute Deviation [3] is a robust way to identify outliers, as it replaces the standard deviation with the median absolute deviation and the mean with the median in the common outlier removal method. Using the median limits the effect that a large outlier could have on the outlier removal criteria. Similar to the LPF, it uses a fixed-size, centered window that slides over the data. Within each window, the data point at the center of the window is considered an outlier if it is larger than the upper bound or smaller than the lower bound. For each rolling window that contains data, the rolling MAD is calculated first, in three steps: the rolling median of the values in the window; the rolling absolute deviations, |x_i - rolling median|, for each value x_i in the window; and the rolling MAD, which is the median of those absolute deviations. Then, the lower bound and upper bound are calculated as follows: Lower bound = rolling median - k * rolling MAD, and Upper bound = rolling median + k * rolling MAD, where k represents the number of MADs away from the rolling median, which is a parameter that can be tuned.
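A minimal sketch of the rolling MAD rule is shown below. It is illustrative only: the function names, the symbol `k`, the odd window size of five weeks, and the sample values are assumptions for this example rather than the project's settings. Points within half a window of either end are left unflagged, since no centered window exists for them.

```python
from statistics import median


def mad_bounds(window, k=3.0):
    """Return (lower, upper) = median -/+ k * MAD for one window."""
    med = median(window)
    mad = median(abs(x - med) for x in window)
    return med - k * mad, med + k * mad


def detect_outliers_mad(series, window_size=5, k=3.0):
    """Flag the center point of each centered window that falls outside the bounds."""
    half = window_size // 2
    flags = [False] * len(series)
    # Only points with a full centered window around them can be checked.
    for i in range(half, len(series) - half):
        window = series[i - half : i + half + 1]
        lower, upper = mad_bounds(window, k)
        flags[i] = not (lower <= series[i] <= upper)
    return flags


# Hypothetical weekly room nights with one obvious spike at index 4.
series = [100, 102, 98, 101, 500, 99, 103, 100, 97]
flags = detect_outliers_mad(series, window_size=5, k=3.0)
```

Because the median and MAD of each window are barely moved by the spike, only the spike itself is flagged, which is the robustness property the method relies on.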

Isolation Forest

Isolation Forest is an unsupervised extension of the popular random forest algorithm. When an isolation forest is built, the algorithm splits each individual data point off from all other data points. The easier it is to isolate a single point from all other points, the more likely it is to be an outlier. This is because a data point that is not an outlier is closely surrounded by other data points and is thus more difficult to isolate. In this project, it is used as a black-box model taken directly from the scikit-learn package [4]. The parameter to tune is the maximum fraction of outliers detected, with a default of 0.01 in this project.

Outlier Removal Rule

The three outlier removal methods all have their own advantages and disadvantages. LPF and MAD are generally better at removing outliers than Isolation Forest, since they can detect local outliers using a sliding window. However, they are not able to detect outliers at the beginning or end of the time series because a centered window is used. For example, when using a window size of four weeks, the first two weeks and the last two weeks of data do not have a window centered on them, so they will never be detected as outliers. On the other hand, although Isolation Forest only detects global outliers, it covers all the data points. Therefore, the final outlier removal rule combines the advantages of these three methods:

- For the first half-window-size weeks and the last half-window-size weeks, Isolation Forest is used to detect outliers.

- All the other weeks in the middle use either LPF or MAD; the choice between the two is a tunable parameter.

For instance, when a four-week centered window is used, Isolation Forest is applied to the first 2 weeks and the last 2 weeks, while LPF or MAD is applied to all the remaining weeks in the middle (Figure 4).

Figure 4. Sample data from Jan 2019 to March 2020


Outlier Imputation Rule

After an outlier is removed, a newly calculated value needs to be imputed, depending on how the outlier is removed.

LPF/MAD

If the outlier is removed by LPF or MAD, then it is imputed as either the lower bound or upper bound generated by LPF or MAD, depending on the original value of the outlier. If the original value is lower than the lower bound, then the outlier is imputed with the lower bound; if the original value is higher than the upper bound, then the outlier is imputed with the upper bound.

Isolation Forest

If the outlier is removed by Isolation Forest, the new value is imputed as the mean value across all sample weeks in the entire time series.
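The two imputation rules can be sketched as below. The function names are hypothetical, and the sketch assumes that the Isolation Forest mean is taken over every week in the series, including the outlier itself; the text does not state whether the outlier is excluded.

```python
from statistics import mean


def impute_bounded(value, lower, upper):
    """LPF/MAD case: replace the outlier with the bound it violated."""
    return min(max(value, lower), upper)


def impute_global_mean(series):
    """Isolation Forest case: mean over all weeks in the series.

    Assumption: the outlier itself is included in the mean.
    """
    return mean(series)


high = impute_bounded(500, lower=95, upper=107)  # above the upper bound
low = impute_bounded(40, lower=95, upper=107)    # below the lower bound
replacement = impute_global_mean([100, 110, 90, 500])
```

Clipping to the violated bound keeps a damped version of the local signal, whereas the global mean discards it, which fits the fact that Isolation Forest is only applied where no local window is available.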

Additional Predictors

Besides the historical hotel booking data, three more predictors were added to the model to further improve its performance: holiday week, air shops, and air passenger traffic.

Holiday Week

This is a binary variable that indicates whether a week is a holiday week or not. A week is a holiday week if it meets one of the following three conditions:

- Contains one or more federal holidays

- Previous week’s Saturday or Sunday is a federal holiday

- Next week’s Monday is a federal holiday.

This strategy allows the models to take into account travelers who travel over a long weekend and arrive during the week before the holiday (for a Monday holiday) or depart during the week after the holiday (for a Saturday/Sunday holiday). The list of federal holidays from 2011 to 2030 was scraped from the United States Federal Government Office of Personnel Management’s website [1]. On average, around ⅓ of the weeks in each year are marked as holiday weeks, because most holidays fall on Mondays and therefore mark both the holiday week and the week before it. We did not consider other holidays, such as Black Friday, because the effect of these special days might already be reflected in the air shopping data.
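The three holiday-week conditions can be expressed compactly as a set intersection. The following is a sketch under the assumption that each week is identified by its Monday and that holidays are supplied as a set of dates; the function name is hypothetical, and only three 2019 federal holidays are listed for brevity.

```python
from datetime import date, timedelta


def is_holiday_week(monday: date, holidays: set) -> bool:
    """Return True if the week starting on `monday` meets any of the three conditions."""
    days_in_week = {monday + timedelta(days=i) for i in range(7)}
    # Saturday and Sunday of the previous week.
    prev_weekend = {monday - timedelta(days=2), monday - timedelta(days=1)}
    # Monday of the next week.
    next_monday = {monday + timedelta(days=7)}
    return bool((days_in_week | prev_weekend | next_monday) & holidays)


# New Year's Day (Tuesday), Martin Luther King Jr. Day and Washington's Birthday (Mondays).
holidays = {date(2019, 1, 1), date(2019, 1, 21), date(2019, 2, 18)}

mondays = [date(2018, 12, 31) + timedelta(weeks=w) for w in range(5)]
holiday_flags = [is_holiday_week(m, holidays) for m in mondays]
```

In this example the week of 2019-01-14 is flagged only because the following Monday is a holiday, which shows how a single Monday holiday marks two consecutive weeks.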

Air Shops

A serious problem with using air shopping data for time series forecasting is that, at the time of prediction, the number of shops will be incomplete. This is because more shops will be made between the time of prediction and the future arrival date that the model is trying to predict. Unlike the air passenger traffic data, which Sabre can project, air shops are difficult to forecast. Thus, to solve this issue and to ensure that no unobtainable data is used in the model, all shop records with an advance purchase (AP) less than or equal to the prediction horizon are dropped. For example, when using a prediction horizon of four weeks, all shops with an AP less than or equal to 28 days are dropped.

Finally, because the raw air shopping data is at the daily level, we aggregated it by departure date to obtain the weekly total air shops.
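A minimal sketch of the advance-purchase filter followed by weekly aggregation is given below. The record layout, function name, and shop counts are assumptions for illustration; AP is computed here as the number of days between the shop date and the departure date.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_air_shops(shops, horizon_weeks=4):
    """Drop shops with AP <= horizon, then sum the rest by departure week.

    shops: iterable of (shop_date, departure_date, n_shops) tuples
    (a hypothetical layout chosen for this sketch).
    """
    cutoff_days = 7 * horizon_weeks
    totals = defaultdict(int)
    for shop_date, departure_date, n_shops in shops:
        advance_purchase = (departure_date - shop_date).days
        if advance_purchase <= cutoff_days:
            continue  # not yet observable at prediction time
        week = departure_date - timedelta(days=departure_date.weekday())
        totals[week] += n_shops
    return dict(totals)


shops = [
    (date(2019, 1, 1), date(2019, 3, 1), 10),  # AP = 59 days, kept
    (date(2019, 1, 31), date(2019, 3, 1), 7),  # AP = 29 days, kept
    (date(2019, 2, 1), date(2019, 3, 1), 5),   # AP = 28 days, dropped
]
weekly_shops = weekly_air_shops(shops, horizon_weeks=4)
```

With a four-week horizon, only shops made more than 28 days before departure contribute to the weekly total for the week of 2019-02-25.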

Air Passenger Traffic

The air passenger traffic dataset contains the number of passengers arriving in each city each month, with a few future projections provided beyond the current date. To reconcile the monthly data with the weekly predictions, the air passenger traffic value for each week is calculated as follows:

- Situation 1: If an entire week is in the same month, we used the air passenger traffic data for that month. For example, for the week 03/23/2020 – 03/29/2020, the monthly air passenger traffic in March 2020 is used.

- Situation 2: If a week spans two months, we used the weighted average of the air passenger traffic values from the two months. For example, for the week 03/30/2020 – 04/05/2020, the monthly air passenger traffic value for this week is: 2/7 * monthly air passenger traffic in March 2020 + 5/7 * monthly air passenger traffic in April 2020.
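Both situations reduce to weighting each month's value by the number of days of the week that fall in that month. The sketch below illustrates this; the function name and the monthly traffic figures are hypothetical.

```python
from datetime import date, timedelta


def weekly_traffic(monday: date, monthly_traffic: dict) -> float:
    """Day-weighted average of monthly traffic over the week starting on `monday`.

    monthly_traffic maps (year, month) to that month's passenger count.
    """
    total = 0.0
    for i in range(7):
        day = monday + timedelta(days=i)
        # Each day contributes 1/7 of the value of the month it falls in.
        total += monthly_traffic[(day.year, day.month)] / 7
    return total


# Hypothetical monthly values, chosen to be divisible by 7.
monthly_traffic = {(2020, 3): 700_000, (2020, 4): 70_000}

within_month = weekly_traffic(date(2020, 3, 23), monthly_traffic)
spanning = weekly_traffic(date(2020, 3, 30), monthly_traffic)
```

For the week of 03/30/2020, two days fall in March and five in April, so the result is 2/7 * 700,000 + 5/7 * 70,000 = 250,000.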

Models

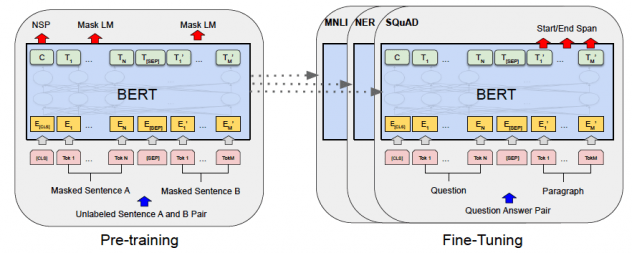

Pipeline Workflow

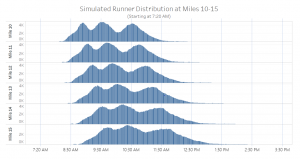

Since hotels differ in booking volumes, best model type, and best predictor combinations, a model pipeline (Figure 5) is implemented for each hotel separately. The entire pipeline consists of two stages: training and prediction.

There are three main use cases of the pipeline: “train-only”, “train+predict”, and “predict-only”. Before making any predictions for a hotel with a given model type, users need to train at least once on the same hotel's data using the same model type to obtain a hyperparameter json file. To do that, users can run either “train-only” or “train+predict”, the latter of which automatically predicts the horizon following the test period. Finally, users also have the option to run “predict-only” if they have a previously saved json file and want to apply it to new samples.

Training

During the training stage, the model pipeline begins by taking user inputs, which specify the path to the hotel input data, the model type (one or all), the error metric to use, the prediction horizon, and the external variables to use. The next step is data preprocessing, including weekly aggregation of total room nights, outlier removal and imputation, splitting the data into training and testing datasets, and generating external variables (holiday, passenger traffic, and air shops; seasonality does not have to be generated). Then, the pipeline enters the model training stage. For each model type specified, the program begins with hyperparameter tuning on the full model to obtain the best hyperparameter combination. Using the selected hyperparameters, it then performs the backward stepwise feature selection process, which returns the predictor combination with the lowest test error metric. The model object returned from the feature selection is saved in a json file for future reference and prediction. Finally, the pipeline selects the best model type among those executed by comparing the test error metric.

Prediction

Users can also do only the prediction on a hotel, if they have a saved model json file from previous training attempts and want to apply it to the new data on the same hotel with the same model type.

Figure 5. Model pipeline

Input Data Requirements

The input of the pipeline should include the following data files:

- historical hotel booking data

- historical air shopping data

- historical and predicted values of passenger traffic data

- holiday data (same for all hotels).

Three different model types (Prophet, SARIMAX, and Random Forest) were used to make the predictions.

Prophet

Prophet is open-source software developed by Facebook’s Core Data Science team for large-scale, automated time series forecasting [5, 6]. Since it is designed for business use cases, it is more intuitive and flexible than most traditional models. It does not require much effort in data preprocessing, because it is robust to outliers, missing data, and dramatic changes in historical trends. It provides options for tuning human-interpretable parameters based on business knowledge. It also allows changepoints (i.e. sudden changes in the trend) to be added manually, which is especially useful when there are known business changes that might affect the trend, so that analysts can incorporate those changes and help fit the model.

Prophet is an additive model that decomposes a time series into three components: trend, seasonality, and holidays. Trend function g(t) estimates the non-periodic changes in the series. Seasonality s(t) represents any periodic changes, which could be daily, weekly, yearly, or any custom periods. Holiday h(t) represents the effects of holidays that happen irregularly.

y(t) = g(t) + s(t) + h(t) + error

SARIMAX

SARIMAX (Seasonal Auto-Regressive Integrated Moving Average with Exogenous variables) is a traditional statistical time series model built on the ARIMA model. The model utilizes values of the target variable at previous time steps (auto-regressive terms) and the errors at previous time steps (moving average terms) to forecast future values. If the time series is not stationary with respect to the mean, the model eliminates this shift by using the difference between consecutive time steps to fit the model. The SARIMAX model adds seasonal auto-regressive and seasonal moving-average terms, where the model uses the values and errors of the target at past time steps that are multiples of the seasonal period, s. For example, if s = 12, P = 2, and Q = 2, the SARIMAX model would analyze the time series at 12 time steps prior and 24 time steps prior. The SARIMAX model also allows for seasonal differencing if the seasonal pattern of the time series is not stationary. Finally, exogenous variables are added to the model as additional regressors. The SARIMAX model can be expressed as (p, d, q), (P, D, Q, s), where the first set of hyperparameters is the non-seasonal order, and the second set of hyperparameters is the seasonal order.

Random Forest

Random forest is a popular ensemble method, widely used for regression and classification tasks, that bags multiple decision trees during the training stage. For a regression problem, the predictions are averaged over all the decision trees to enhance accuracy and avoid overfitting. This project used the RandomForestRegressor class in the scikit-learn Python library [12].

Random forest can be used for time series forecasting by transforming the original time series dataset into a format suitable for a supervised learning problem. In particular, the time series data needs to be restructured using a sliding-window representation. Lagged values (i.e. values from previous time steps) are used as input variables. Additional external variables such as seasonality (i.e. month of the year) and holiday can also be added as features. Table 1 shows a sample data frame in the desired format for the Random Forest model.

Table 1: Sample Data for Random Forest

| Total_RoomNights | Lag1 | Lag2 | Lag3 | Lag4 | Seasonality |
| --- | --- | --- | --- | --- | --- |
| 459 | 256 | 317 | 287 | 345 | 01 |
| 424 | 459 | 256 | 317 | 287 | 02 |
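The sliding-window restructuring behind Table 1 can be sketched as follows. The function name is hypothetical, the weekly values are read off the table (oldest first), and the months assigned to the four earliest weeks are assumptions, since the table only shows the months of the two target weeks.

```python
def make_lag_features(series, months, n_lags=4):
    """Turn a weekly series into supervised-learning rows with lagged predictors."""
    rows = []
    # The first n_lags weeks have no complete lag history and are dropped.
    for t in range(n_lags, len(series)):
        row = {"Total_RoomNights": series[t]}
        for lag in range(1, n_lags + 1):
            row[f"Lag{lag}"] = series[t - lag]
        row["Seasonality"] = months[t]
        rows.append(row)
    return rows


series = [345, 287, 317, 256, 459, 424]  # weekly totals, oldest first
months = [1, 1, 1, 1, 1, 2]              # month of each week (first four assumed)
rows = make_lag_features(series, months, n_lags=4)
```

Each row can then be passed to a regressor with `Total_RoomNights` as the target and the remaining columns as features.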

Model Comparison

As presented in Table 2, each model type has clear advantages and disadvantages.

Table 2: Model comparison

| | Prophet | SARIMAX | Random Forest |
| --- | --- | --- | --- |
| Avg. MAPE (stepwise feature selection) | 25% | 22% | 12.2% |
| Run time per hotel | 2 minutes (50 random search iterations) | 8 minutes (grid search, 256 iterations); 1 minute (auto-arima) | 4 minutes (50 random search iterations) |
| Initial required training data | 12 weeks | 20 weeks | 4 weeks |
| Unique features | Options to tune the weight of predictors based on business knowledge | Traditional regression-style output and statistics such as p-values | Feature importance measures for model interpretability |
| Challenges | More than 9 parameters to tune, and could take over 30 hours to try all combinations | Can be challenging to manually select the model parameters | 3 weeks of initial training data is lost due to the need to create lagged variables |

Prophet offers many options to tune hyperparameters and the weights of variables, but trying all parameter combinations can be very slow. SARIMAX offers the fastest training times and interpretable output summary tables with helpful regression-style outputs. However, it is difficult to manually select the model hyperparameters, even with pre-existing business knowledge about the data. Finally, Random Forest offers a feature importance measure, which helps users understand what the most important factors in the model are. However, one minor drawback of using a traditional machine learning model is that a few weeks of initial training data are lost in feature engineering to create lagged variables.

Each model type also has different requirements for the length of the initial training data. Prophet does not have a formal number of weeks needed, but it is advisable to have enough initial training data so that the model can begin to learn the pattern in the data before cross-validation begins. In this project, 12 weeks of initial training data were used for Prophet. The required initial training period for SARIMAX is determined by the range of hyperparameters being tested, because testing additional lags, seasonal lags, or seasonal differencing operations requires a larger training sample for the model to learn the time series. Given the range of hyperparameters tested for SARIMAX in this project, at least 20 weeks of initial training data were required. On the other hand, a machine learning model such as random forest requires only enough training data to satisfy the lags used as predictors, which in this project is four weeks. We used the TimeSeriesSplit class from the scikit-learn package [13] to set up the walk-forward cross-validation for Random Forest.

Compared to SARIMAX, Prophet sacrifices some inferential benefits for flexibility and interpretability. Prophet finds the optimal fit on the historical data to use for prediction but does not analyze the relationship between time steps in a time series, as opposed to SARIMAX, which assumes correlations between time steps. Because it does not model these correlations, Prophet cannot capture unstable trends as well as other models do. In addition, Prophet works much better on time series with strong seasonality and several seasons of historical data [6].

Cross Validation

To select the best model hyperparameters, we implemented walk-forward cross-validation. The initial dataset was split into a training dataset that began with the week of 12/31/2018 and ended with the week of 12/30/2019, and a test dataset that began with the week of 1/6/2020 and ended with the week of 2/24/2020.

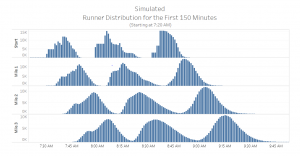

We implemented walk-forward cross-validation on the training set. This involved first fitting the model on the first few weeks of training data (number of weeks determined by the model requirement) and then making predictions on the next several weeks of data (number of weeks determined by the horizon). For example, a model is first trained on weeks 1-12 and cross-validated on weeks 13-16. Then, we “walk forward” four weeks, training the model on weeks 1-16 and cross-validating on weeks 17-20. We continued this process until the four weeks to cross-validate were the last four weeks in our training data. For each walk-forward step, we calculated the cross-validation error metrics, which were then averaged across steps to give the final cross-validation metric. Walk-forward cross-validation [14] was implemented as presented in Figure 6.
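The expanding-window scheme described above can be sketched as a simple fold generator. This is an illustration rather than the project's implementation; the function name and defaults are assumptions, indices are zero-based, and any weeks left over after the last full validation block are simply not used in this sketch.

```python
def walk_forward_splits(n_weeks, initial_train=12, horizon=4):
    """Return (train_indices, validation_indices) pairs for walk-forward CV."""
    splits = []
    train_end = initial_train
    while train_end + horizon <= n_weeks:
        train_idx = list(range(0, train_end))
        val_idx = list(range(train_end, train_end + horizon))
        splits.append((train_idx, val_idx))
        train_end += horizon  # walk forward by one horizon
    return splits


# 20 weeks of training data give two folds:
# weeks 1-12 -> 13-16, then weeks 1-16 -> 17-20 (one-based week numbers).
splits = walk_forward_splits(n_weeks=20, initial_train=12, horizon=4)
```

The error metric would be computed on each validation block and averaged across folds to score one hyperparameter combination.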

Figure 6. Implementation of walk-forward cross validation

Backward Stepwise External Variable Selection

We hypothesized that the importance of different external variables might differ between hotels. Therefore, we implemented an algorithm to dynamically select the best combination of these external variables. However, to reduce the runtime, we applied the variable selection after the best model hyperparameters were chosen in cross-validation using all the external variables specified by the user (the full model). Once the hyperparameters had been selected and the full model had been fit, we tried dropping each external variable in the model to determine if dropping any variable improved the model, and if so which one. If the full model performed better than all of the partial models with one variable dropped, we stopped the algorithm. On the other hand, if any of the partial models performed better than the full model, we set the best partial model as the new full model and repeated the process of dropping each variable.

For example, take a model fit in cross-validation using the variables holiday, air passenger traffic, and air shops. Three partial models are tested, each with a different variable dropped from the full model, as presented in Table 3:

Table 3: Variable selection

| Model | Variables in the Model | MAPE |
| --- | --- | --- |
| Full Model | Holiday, Air Passenger Traffic, Air Shops | 0.26 |
| Partial Model (Drop Holiday) | Air Passenger Traffic, Air Shops | 0.31 |
| Partial Model (Drop Air Passenger Traffic) | Holiday, Air Shops | 0.21 |
| Partial Model (Drop Air Shops) | Holiday, Air Passenger Traffic | 0.27 |

The partial model that dropped air passenger traffic had a lower MAPE than the full model, so this partial model becomes the new full model.

Table 4: Selection of best model based on variable selection

| Model | Variables in the Model | MAPE |
| --- | --- | --- |
| Full Model | Holiday, Air Shops | 0.21 |
| Partial Model (Drop Holiday) | Air Shops | 0.22 |
| Partial Model (Drop Air Shops) | Holiday | 0.23 |

In the next iteration, no partial model outperforms the full model containing holiday and air shops, so this model is selected as the best model (Table 4).

This stepwise algorithm relies on one main assumption. The algorithm stops when there is no partial model with one fewer variable that outperforms the full model. Therefore, it assumes that no combination of even fewer variables would outperform the full model either. Thus, it is not guaranteed that the best variable combination will be found. However, performing feature selection in this way reduces the runtime, especially when a user wants to consider additional external variables.
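The backward stepwise procedure can be sketched as a greedy loop over a scoring function. In this illustration, the scoring function is a lookup of the MAPE values from Tables 3 and 4 rather than a real model fit, the function and variable names are hypothetical, and the loop stops at one remaining variable because the text does not say whether a model with no external variables is also tried.

```python
def backward_stepwise(variables, score):
    """Greedily drop one variable at a time while the error metric improves."""
    current = list(variables)
    best = score(current)
    while len(current) > 1:
        # Score every partial model that drops exactly one variable.
        candidates = [
            (score([v for v in current if v != dropped]), dropped)
            for dropped in current
        ]
        cand_score, dropped = min(candidates)
        if cand_score >= best:
            break  # no partial model beats the current full model
        current = [v for v in current if v != dropped]
        best = cand_score
    return current, best


# MAPE values taken from Tables 3 and 4, keyed by the set of variables used.
mape_table = {
    frozenset({"holiday", "air_passenger", "air_shops"}): 0.26,
    frozenset({"air_passenger", "air_shops"}): 0.31,
    frozenset({"holiday", "air_shops"}): 0.21,
    frozenset({"holiday", "air_passenger"}): 0.27,
    frozenset({"air_shops"}): 0.22,
    frozenset({"holiday"}): 0.23,
}

selected, selected_mape = backward_stepwise(
    ["holiday", "air_passenger", "air_shops"],
    score=lambda vs: mape_table[frozenset(vs)],
)
```

With three variables the loop evaluates at most six models instead of all eight subsets, and the saving grows quickly as more external variables are added.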

Key Findings

We performed preliminary analysis on the prediction results on a sample of eight hotels by running them through our pipeline. Two hotels were selected from each city – one of a higher volume and the other of a lower volume – to investigate the relationship between hotel specific characteristics and predictability. We compared the MAPE of the best models for each hotel (i.e., models with the best hyperparameter and predictor combinations), using a horizon of four weeks. The prediction period was the week of 2020-01-06 (inclusive) to the week of 2020-03-02 (exclusive), and the training period was the week of 2018-12-31 to the week of 2019-12-30. For SARIMAX, we used the results from grid search (and not auto-arima) to ensure consistency in selection metrics between model types, because auto-arima is tuned using AIC instead of MAPE.

In this project, the baseline model used for comparison takes the most recent weekly total room nights available at the time of prediction as the predicted value. For example, with a horizon of four weeks, the predicted total room nights for the week of 2021-02-22 would be the actual total room nights for the week of 2021-01-25.
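The baseline forecast, the MAPE metric, and the percentage difference used to compare models against the baseline can be sketched as follows. The function names and the sample series are hypothetical; MAPE is expressed here as a fraction, matching how it is reported in the results tables.

```python
def baseline_forecast(series, horizon=4):
    """Predict each week with the actual value from `horizon` weeks earlier.

    The returned list is aligned with series[horizon:].
    """
    return series[:-horizon]


def mape(actual, predicted):
    """Mean absolute percentage error, expressed as a fraction."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def pct_difference(model_mape, baseline_mape):
    """(model MAPE - baseline MAPE) / baseline MAPE; negative means better than baseline."""
    return (model_mape - baseline_mape) / baseline_mape


series = [100, 110, 120, 130, 125, 100, 150, 130]  # hypothetical weekly room nights
actual = series[4:]
predicted = baseline_forecast(series, horizon=4)
baseline_mape = mape(actual, predicted)
improvement = pct_difference(0.16, 0.32)
```

In this example the baseline MAPE is 0.125, and a model with half the baseline's MAPE has a percentage difference of -50%.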

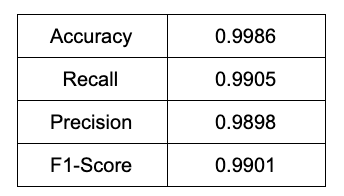

Overall Performance

Table 5 shows the MAPE scores of the baseline model and our best model for each hotel. The percentage difference between the best model’s MAPE and the baseline model’s MAPE is calculated so that the more negative the value, the better the best model performs compared to the corresponding baseline. In all eight hotels, our best models were at least 40% better than the baseline. On average, they had a MAPE score of 0.12, which was 53.7% better than the baseline predictions.

We also observed that, except in City 3, the low-volume hotel (listed first for each city) had a higher MAPE than its high-volume counterpart in the same city.

Table 5. MAPE of Baseline and the Best Performing Model for Each Hotel

| Hotel | Baseline | Our model | Percentage Difference |
| --- | --- | --- | --- |
| City 1 Hotel 6 | 0.32 | 0.16 | -51.1% |
| City 1 Hotel 9 | 0.20 | 0.06 | -70.1% |
| City 2 Hotel 7 | 0.23 | 0.11 | -51.4% |
| City 2 Hotel 1 | 0.14 | 0.06 | -54.9% |
| City 3 Hotel 4 | 0.33 | 0.10 | -68.9% |
| City 3 Hotel 1 | 0.24 | 0.14 | -43.4% |
| City 4 Hotel 8 | 0.35 | 0.20 | -42.3% |
| City 4 Hotel 5 | 0.24 | 0.13 | -47.2% |
| Average | 0.26 | 0.12 | -53.7% |

* Percentage Difference calculated as (best model MAPE – baseline MAPE)/baseline MAPE
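The two quantities behind this table can be sketched as follows. This assumes the standard definition of MAPE, expressed as a fraction so that 0.12 means 12%, and weeks with nonzero actual bookings. The function names are illustrative and not taken from the project's package.

```python
def mape(actual, predicted):
    """Mean absolute percentage error as a fraction (0.12 means 12%).

    Assumes every actual value is nonzero.
    """
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("actual and predicted must be non-empty and equal in length")
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def pct_difference(model_mape, baseline_mape):
    """(model MAPE - baseline MAPE) / baseline MAPE; negative means the model is better."""
    return (model_mape - baseline_mape) / baseline_mape
```

Applied to the rounded values for City 1 Hotel 9, `pct_difference(0.06, 0.20)` gives about -70%, close to the reported -70.1%. Small gaps of this kind are expected because the table's percentages were presumably computed from unrounded MAPEs.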

Comparison between Model Types

Table 6 shows the percentage differences between each model type and the baseline model for each hotel. The bolded values correspond to the model type with the most negative percentage difference and thus the highest performance relative to the baseline. In this sample, Random Forest outperformed the other two model types in 7 out of the 8 hotels. On average, it was 53% better than baseline, around 46% better than Prophet, and around 30% better than SARIMAX.

Even in the hotel where both SARIMAX and Prophet failed to beat the baseline (i.e., City 4 Hotel 8), Random Forest still performed 42% better than the baseline. For this reason, Random Forest is used as the default model type in our package.

Table 6. Percentage Differences of MAPE from Baseline

| Model | C 1 H 6 | C 1 H 9 | C 2 H 7 | C 2 H 1 | C 3 H 4 | C 3 H 1 | C 4 H 8 | C 4 H 5 | Average |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Baseline | 0.32 | 0.20 | 0.14 | 0.24 | 0.24 | 0.34 | 0.24 | 0.35 | 0.26 |
| Prophet | 2% | -29% | -25% | -29% | 10% | -36% | 46% | 7% | -7% |
| SARIMAX | -36% | -19% | **-51%** | -31% | -46% | -36% | 104% | -46% | -20% |
| RF | **-51%** | **-70%** | -48% | **-55%** | **-69%** | **-43%** | **-42%** | **-47%** | **-53%** |

* Percentage Difference calculated as (MAPE of each model type – baseline MAPE) / baseline MAPE

There are several hypotheses as to why Random Forest performed better. First, because of the limited time span of our sample, time series models (i.e., SARIMAX and Prophet) might lose their advantage in detecting trends. Since the training dataset contains only slightly more than one year of data, there are not enough cycles for the models to capture yearly seasonal patterns. Prophet in particular relies heavily on seasonal patterns and might not have had enough information to infer them. Similarly, historical trends might not be evident in such limited training data, which might lead the time series models to make inaccurate forecasts. These problems do not affect Random Forest, because it does not rely on historical trends when making predictions. If these hypotheses are true, the issue could be mitigated by including more years of data in the training sample.

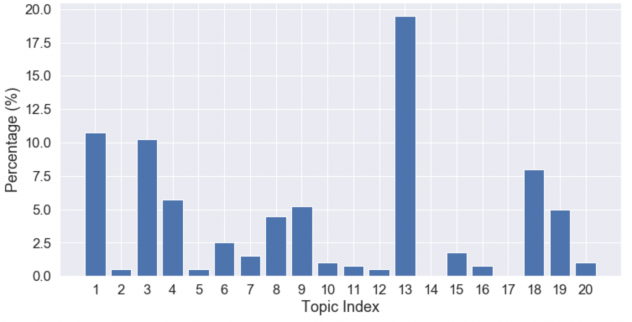

Comparison between Predictors

Table 7 shows the average rank of feature importance across the eight hotels, using the feature importance measure returned by Random Forest. A higher rank (closer to 1) means the predictor is more influential relative to the other predictors. On average, hotel bookings from the past two weeks were important predictors, as expected. To our surprise, air passenger traffic and air shops also ranked highly, even though air passenger traffic is monthly data and air shops are incomplete at the time of prediction. This observation hints at a relationship between a hotel's demand and its appeal to air travelers, which may depend on factors such as its distance to the airport, distance to downtown, and conference room rental availability.

Table 7. Average Rank of Feature Importance Generated by Random Forest Across 8 Hotels

| Rank | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Variable | Lag 1 | Passenger traffic | Lag 2 | Shops | Seasonality | Lag 4 | Lag 3 | Is holiday week | Is not holiday week |
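One plausible way to produce an average ranking like the one in Table 7 is sketched below. It assumes each hotel's importances are available as a dictionary, for example built from the `feature_importances_` attribute of a fitted scikit-learn `RandomForestRegressor`. The report does not spell out the exact averaging procedure, so the function name, the input layout, and the tie handling are assumptions.

```python
def average_importance_rank(importances_by_hotel):
    """Average each feature's importance rank across hotels.

    importances_by_hotel is an assumed layout: {hotel: {feature: importance}}.
    Rank 1 is the most important feature within a hotel. Ties within a hotel
    are broken by dictionary order, which is an arbitrary choice.
    Returns a list of (feature, mean_rank) pairs sorted from best to worst.
    """
    ranks = {}
    for importances in importances_by_hotel.values():
        ordered = sorted(importances, key=importances.get, reverse=True)
        for rank, feature in enumerate(ordered, start=1):
            ranks.setdefault(feature, []).append(rank)
    mean_ranks = {feature: sum(r) / len(r) for feature, r in ranks.items()}
    return sorted(mean_ranks.items(), key=lambda item: item[1])
```

Averaging ranks rather than raw importances keeps hotels comparable even when their importance scores are on different scales, at the cost of discarding how large the gaps between predictors are.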

We also observed that whether a week is a holiday week was the least important predictor of all. There are two potential hypotheses as to why this might be the case. First, it is possible that our definition of a holiday week was too general and included weeks that might not affect hotel bookings, which introduced noise and misled the models. As discussed, we defined a normal week as a holiday week if the holiday fell at the beginning or end of the week, to account for travel over long weekends. This definition is more reasonable for major holidays like Christmas, when people travel days before the actual holiday. However, for holidays where travel is less common, such as Columbus Day or Veterans Day, this assumption might not be appropriate. Second, not all the hotels analyzed in this project are in major tourist destinations or near airports, so these hotels may not receive large numbers of holiday bookings.

Additionally, although the predictors differed in average importance, we observed that the best predictor combinations varied between hotels, and no single predictor was important to all hotels. Table 8 shows the MAPE, averaged across the three model types, of models with different predictor combinations. The bolded values correspond to the best predictor combination for each hotel. As observed, no predictor combination, or even a single predictor, consistently returned the lowest error. Similarly, simply including all predictors in the model could introduce noise and result in large errors. This observation supported our decision that the model pipeline should dynamically find the best predictors with the stepwise feature selection algorithm.

Table 8. Average MAPE across Three Model Types of Models with Different Predictors Combinations

| Predictor Combinations | C 1 H 6 | C 1 H 9 | C 2 H 7 | C 2 H 1 | C 3 H 4 | C 3 H 1 | C 4 H 8 | C 4 H 5 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Hotel Booking History (History) | 0.31 | 0.38 | 0.13 | 0.15 | 0.26 | 0.36 | 0.37 | 0.19 |
| History, Seasonality | 0.34 | 0.22 | 0.14 | 0.16 | 0.22 | 0.37 | 0.44 | 0.33 |
| History, Seasonality, Holiday | 0.31 | 0.27 | 0.15 | 0.11 | 0.20 | 0.27 | 0.42 | 0.31 |
| History, Seasonality, Passenger | 0.27 | 0.13 | 0.15 | 0.12 | 0.14 | 0.17 | 0.34 | 0.22 |
| History, Seasonality, Holiday, Passenger | 0.26 | 0.14 | 0.15 | 0.13 | 0.15 | 0.17 | 0.39 | 0.24 |
| History, Seasonality, Holiday, Shops | 0.27 | 0.18 | 0.15 | 0.19 | 0.18 | 0.21 | 0.56 | 0.22 |
| All Predictors | 0.28 | 0.15 | 0.15 | 0.10 | 0.14 | 0.18 | 0.54 | 0.18 |
| Difference between Best and Worst Combination | 8ppt | 26ppt | 2ppt | 10ppt | 11ppt | 20ppt | 22ppt | 15ppt |

* In the last row, ppt refers to “percentage points,” calculated as (max MAPE – min MAPE) × 100 for each hotel. E.g., for City 1 Hotel 6, it is 0.34 – 0.26 = 0.08, or 8ppt.

Comparison between Low-Volume and High-Volume Hotels

Finally, we compared model performance on hotels with different average booking volumes. We selected two hotels from each city with different average total room nights in the sample period, as presented in Table 9.

Table 9. Average Weekly Total Room-Nights from 2018-12-31 to 2020-03-01

| Low Volume | Average Weekly Room-Nights | High Volume | Average Weekly Room-Nights |
| --- | --- | --- | --- |
| City 1 Hotel 6 | 560 | City 1 Hotel 9 | 950 |
| City 2 Hotel 7 | 620 | City 2 Hotel 1 | 2220 |
| City 3 Hotel 4 | 1220 | City 3 Hotel 1 | 3320 |
| City 4 Hotel 8 | 190 | City 4 Hotel 5 | 1250 |

Table 10 presents the MAPEs of the two types of hotels in each city and the percentage difference between them. The percentage difference was calculated so that the more positive the value is, the better the models performed for the high-volume hotel compared with its low-volume counterpart. In all cities, the average MAPE across the three model types was at least 30% better, and on average 67.8% better, for high-volume hotels than for low-volume hotels. The pattern held for each individual model type, except for Random Forest in City 3. We are unsure about the reason for this exception and would need more information about each city to form a hypothesis.

There are several hypotheses as to why high-volume hotels were more predictable. Higher-volume hotels are likely more recognizable hotels with more stable demand and historical booking trends that the models could capture. Alternatively, larger hotels might have specific characteristics that help with predictability. For example, high-volume hotels might be located near downtown or tourist sites and attract more air travelers during holidays, whose effects on bookings could be captured by the holiday and air passenger predictors in the model. The source of guests in smaller hotels might be less obvious (e.g., non-air travelers) and thus less predictable.

Table 10. Comparison of MAPE for Low- and High-Volume Hotels in Each City

| City | Model | Low volume | High volume | Percentage Difference |
| --- | --- | --- | --- | --- |
| City 1 | Prophet | 0.33 | 0.14 | 80.3% |
| | SARIMAX | 0.21 | 0.16 | 26.0% |
| | RF | 0.16 | 0.06 | 90.7% |
| | Average | 0.23 | 0.12 | 63.4% |
| City 2 | Prophet | 0.18 | 0.10 | 55.9% |
| | SARIMAX | 0.11 | 0.10 | 15.3% |
| | RF | 0.12 | 0.06 | 64.5% |
| | Average | 0.14 | 0.09 | 45.6% |
| City 3 | Prophet | 0.37 | 0.10 | 114.5% |
| | SARIMAX | 0.18 | 0.16 | 14.8% |
| | RF | 0.10 | 0.14 | -28.1% |
| | Average | 0.22 | 0.15 | 36.4% |
| City 4 | Prophet | 0.37 | 0.25 | 36.3% |
| | SARIMAX | 0.72 | 0.13 | 139.4% |
| | RF | 0.20 | 0.13 | 47.6% |
| | Average | 0.48 | 0.17 | 95.6% |

* Percentage Difference calculated as (low-volume MAPE – high-volume MAPE) / AVG(low MAPE, high MAPE)
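The footnote's formula divides by the mean of the two MAPEs rather than by one of them. A minimal sketch follows; the function name is illustrative only.

```python
def symmetric_pct_difference(low_volume_mape, high_volume_mape):
    """(low - high) / mean(low, high); positive means the high-volume hotel has lower error."""
    mean_mape = (low_volume_mape + high_volume_mape) / 2
    return (low_volume_mape - high_volume_mape) / mean_mape
```

Because the denominator is the mean of the two values, the result for positive MAPEs lies between -200% and 200%, which is why entries above 100% (such as SARIMAX in City 4) are possible. Applying the function to the rounded values in the table gives results close to, but not exactly equal to, the reported percentages, which were presumably computed from unrounded MAPEs.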

Conclusions and Next Steps

In this project, we built machine learning models for hotel demand prediction using previous hotel bookings, air passenger traffic volume, air shopping data, holidays, and seasonality. Our models show a substantial improvement over the baseline. For the sample of eight hotels that we analyzed in depth, Random Forest beat the baseline model by 53% on average and had the best performance in seven hotels. In addition, recent historical hotel bookings, air passenger traffic, and air shops were the three most important predictors. However, no external variable combination worked well for all hotels, and thus a stepwise algorithm was implemented to select the best external variables. Finally, two hotels with different average weekly bookings were compared in each city, and models for high-volume hotels performed on average 67.8% better than models for low-volume hotels in the same cities.

The findings of this project suggest many potential avenues for further research. First, including additional data sources, such as city-specific features regarding city type (tourist city or not) and local events (e.g., conferences and sports events), would help in better understanding and predicting hotel demand. In addition, although the given models were built using only pre-COVID data, it is worth exploring more recent post-COVID data to obtain a more comprehensive picture of hotel demand. Future work could also apply other supervised machine learning models as well as deep learning models that have been shown to work well on time series data. For instance, a recurrent neural network (RNN) is a deep learning architecture designed to handle sequence dependence. Finally, given that the best model type varies across hotels, it could be valuable to cluster hotels and examine whether hotels that perform best with a certain model type or set of external variables share similar characteristics.

References

[1] Office of Personnel Management, 2022. “Policy, Pay, and Oversight, Pay and Leave”. United States Federal Government. https://www.opm.gov/policy-data-oversight/pay-leave/federal-holidays/#url=2022

[2] Kirsten Perry, 2019. “Unsupervised Machine Learning Approaches for Outlier Detection in Time Series, using Python”. Towards Data Science. https://medium.com/towards-data-science/unsupervised-machine-learning-approaches-for-outlier-detection-in-time-series-using-python-5759c6394e19

[3] A. Tayip Sakka, 2019. “Median Absolute Deviation tutorial from Medium”. Towards Data Science. https://towardsdatascience.com/practical-guide-to-outlier-detection-methods-6b9f947a161e

[4] Documentation for the scikit-learn Isolation Forest function: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.IsolationForest.html

[5] Documentation for Prophet Package: https://facebook.github.io/prophet/

[6] Sean J. Taylor and Benjamin Letham, 2017. “Forecasting at scale”. PeerJ Preprints. https://doi.org/10.7287/peerj.preprints.3190v2

[7] Documentation for Prophet Hyperparameter Tuning: https://facebook.github.io/prophet/docs/diagnostics.html#hyperparameter-tuning

[8] Documentation for Stan Package

[9] Documentation for Multiprocessing Package: https://docs.python.org/3/library/multiprocessing.html

[10] Documentation for the statsmodels SARIMAX function: https://www.statsmodels.org/dev/generated/statsmodels.tsa.statespace.sarimax.SARIMAX.html

[11] Documentation for the pmdarima auto_arima function: https://alkaline-ml.com/pmdarima/modules/generated/pmdarima.arima.auto_arima.html

[12] Documentation for the Random Forest model: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html

[13] Documentation for the scikit-learn TimeSeriesSplit function: https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.TimeSeriesSplit.html

[14] Audhi Aprillant, 2021. “Walk-Forward Optimization — Cross-Validation Technique for Time-Series”. Medium. https://audhiaprilliant.medium.com/walk-forward-optimization-cross-validation-technique-for-time-series-data-61739f58f2c0