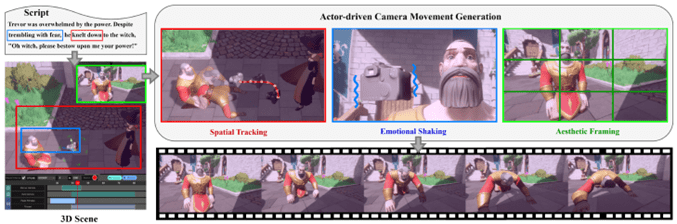

Immersion plays a vital role in the viewer's experience of cinematic creation. In traditional cinematography, immersion is created by manually synchronizing the camera with the actor, which is a labor-intensive process. In this work, we analyze three aspects of cinematographic immersion: the actor's spatial motion, emotional expression, and aesthetic on-frame location. These components are combined into a high-level evaluation mechanism that guides the subsequent automatic cinematography. On a 3D virtual stage, we propose a camera control framework based on a generative adversarial network (GAN). The framework adversarially learns to synthesize actor-driven camera movements by comparing them with manual samples from human artists. To achieve fine-grained synchronization with the actor, our generator uses an encoder-decoder architecture that transfers features extracted from body kinematics and emotion factors into a camera-space trajectory. To better represent different emotional states, we further incorporate a regularization term that controls the stylistic variance of camera shake in the trajectory. To improve aesthetic frame composition, a self-supervised adjustor modifies the camera placement so that the actor's projected on-frame location complies with aesthetic rules. Experimental results show, both quantitatively and qualitatively, that our method produces high-quality, immersive cinematic videos. Live examples are shown in the supplementary video (https://youtu.be/3OCZRjOoSpQ). More details can be found at https://arxiv.org/abs/2303.17041.
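The projection-based composition idea can be illustrated with a minimal sketch. It assumes a simple pinhole camera, uses the rule of thirds as a stand-in for the aesthetic rules (which are not spelled out here), and replaces the self-supervised adjustor, a learned component in the actual method, with plain gradient descent on the camera's yaw. All function names, the coordinate convention, and the actor position are hypothetical and chosen only for illustration.

```python
import math

# Rule-of-thirds "power points" in normalized image coordinates (u right, v down).
# Assumption: rule of thirds stands in for the aesthetic rules of the real method.
THIRDS = [(a, b) for a in (1 / 3, 2 / 3) for b in (1 / 3, 2 / 3)]


def project(point, cam_pos, yaw, focal=1.0):
    """Pinhole projection of a 3D world point to normalized image coords.

    Hypothetical convention: y is up, the camera looks along +z at yaw=0,
    and yaw rotates the viewing direction about the y axis.
    Returns None if the point is behind the camera.
    """
    dx, dy, dz = (p - q for p, q in zip(point, cam_pos))
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the offset by -yaw so the viewing direction maps to +z.
    xc = c * dx - s * dz
    zc = s * dx + c * dz
    yc = dy
    if zc <= 1e-6:
        return None
    u = 0.5 + 0.5 * focal * xc / zc
    v = 0.5 - 0.5 * focal * yc / zc  # image v grows downward
    return u, v


def thirds_loss(uv):
    """Squared distance from (u, v) to the nearest rule-of-thirds point."""
    u, v = uv
    return min((u - a) ** 2 + (v - b) ** 2 for a, b in THIRDS)


def adjust_yaw(actor, cam_pos, yaw, steps=200, lr=0.5, eps=1e-4):
    """Pan the camera by gradient descent (central finite differences) on thirds_loss."""

    def loss(y):
        uv = project(actor, cam_pos, y)
        return math.inf if uv is None else thirds_loss(uv)

    for _ in range(steps):
        grad = (loss(yaw + eps) - loss(yaw - eps)) / (2 * eps)
        yaw -= lr * grad
    return yaw


if __name__ == "__main__":
    actor = (0.3, 0.8, 5.0)  # hypothetical actor head position
    cam = (0.0, 0.0, 0.0)
    new_yaw = adjust_yaw(actor, cam, 0.0)
    print("before:", project(actor, cam, 0.0))
    print("after: ", project(actor, cam, new_yaw), "yaw =", round(new_yaw, 3))
```

In this toy setup, the pan moves the actor's projection horizontally onto the right-hand thirds line. Because only yaw is optimized, the vertical offset barely changes; an adjustor that also acts on camera position and pitch would presumably be needed to satisfy both coordinates.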