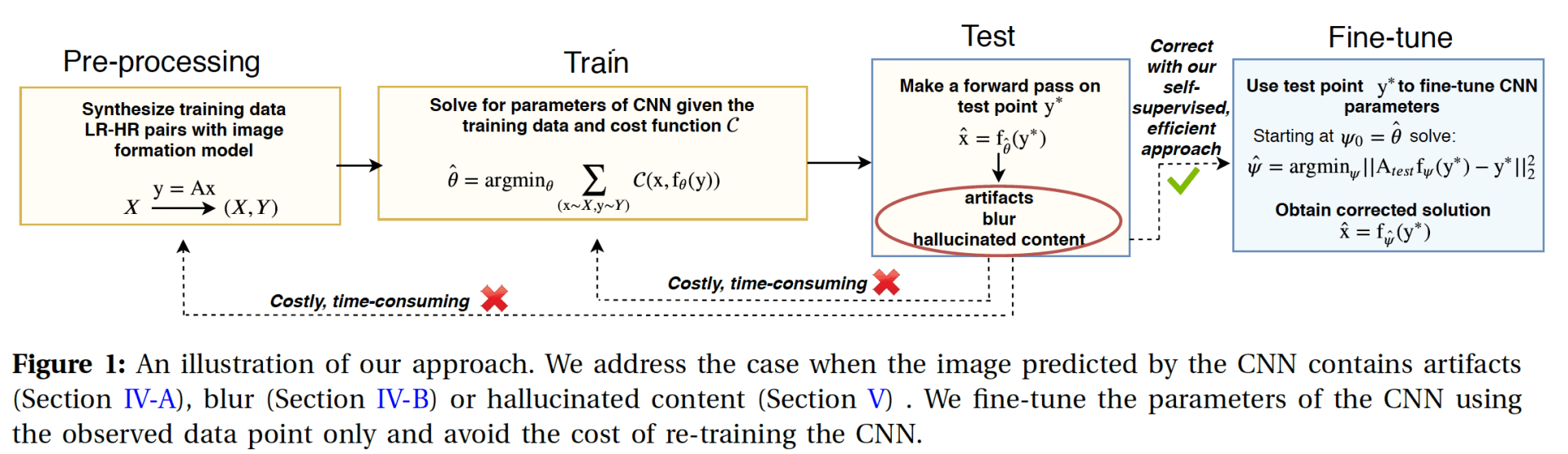

While Deep Neural Networks (DNNs) trained for image and video super-resolution regularly achieve new state-of-the-art performance, they also suffer from significant drawbacks. One of their limitations is their tendency to generate strong artifacts in their solution. This may occur when the low-resolution image formation model does not match that seen during training. Artifacts also regularly arise when training Generative Adversarial Networks for inverse imaging problems. In this project, we propose an efficient, fully self-supervised approach to remove the observed artifacts. At test time, given an image and its known image formation model, we fine-tune the parameters of the trained network and iteratively update them using a data consistency loss.

Given a CNN that was applied for the super-resolution problem of scale factor 4, we apply our method on multiple case scenarios:

- Testing the trained CNN on a test image that was downsampled by 2 as opposed to 4. Artifacts appear at the output of our CNN. Our method can successfully fine-tune our trained CNN such that artifacts are removed.

- Testing the CNN on a test image that has undergone additional blur in addition to downsampling. A blurry image will appear at the output. Our method can successfully fine-tune our trained CNN such that reduced blur is observed.

Our method is not limited to handling disagreements in training and testing forward models. Our fine-tuning method can also be used in the following cases:

- Reducing artifacts due to texture, feature and adversarial losses.

- Reducing artifacts due to sub-optimal training procedure.

Click here to check out our paper on arxiv: https://arxiv.org/pdf/1912.12879.pdf