1. Variational Deep Atmospheric Turbulence Correction for Video

We present a novel variational deep-learning approach for video atmospheric turbulence correction. We modify and tailor a Nonlinear Activation Free Network (NAFNet) to video restoration. Embedding it in a variational inference framework boosts the model's performance and stability. We do this by conditioning the model on features extracted by a variational autoencoder (VAE). We further enrich these features by making the VAE encoder capture information about the image-formation process. To achieve this, we introduce a new loss that predicts the parameters of the geometric distortion and of the spatially variant blur that degrade the video sequence. Experiments on a comprehensive synthetic video dataset demonstrate the effectiveness and reliability of the proposed method and show that it outperforms existing state-of-the-art approaches.
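The composite training objective described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The L1 restoration term, the closed-form KL divergence to a standard-normal prior, the mean-squared error on the degradation parameters, and the weights `beta` and `lam` are all assumptions. The names `restored`/`clean` (network output and ground-truth frames), `mu`/`log_var` (VAE latent statistics), and `pred_params`/`true_params` (predicted and true distortion/blur parameters) are hypothetical.

```python
import numpy as np

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent
    dimensions and averaged over the batch (standard closed form)."""
    per_sample = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)
    return float(np.mean(per_sample))

def composite_loss(restored, clean, mu, log_var, pred_params, true_params,
                   beta=1e-3, lam=0.1):
    """Hypothetical objective: restoration + VAE prior + auxiliary prediction
    of degradation parameters. Loss types and weights are assumptions."""
    rec = float(np.mean(np.abs(restored - clean)))            # L1 restoration term
    kl = kl_to_standard_normal(mu, log_var)                   # keeps the latent close to N(0, I)
    param = float(np.mean((pred_params - true_params) ** 2))  # distortion/blur parameter regression
    total = rec + beta * kl + lam * param
    return total, {"rec": rec, "kl": kl, "param": param}

# Usage with random arrays: 2 clips, 5 frames, 3 channels, 32x32 pixels,
# a 16-dim latent and 8 (hypothetical) degradation parameters per clip.
rng = np.random.default_rng(0)
clean = rng.random((2, 5, 3, 32, 32))
restored = clean + 0.05 * rng.standard_normal(clean.shape)
mu, log_var = rng.standard_normal((2, 16)), np.zeros((2, 16))
loss, parts = composite_loss(restored, clean, mu, log_var,
                             rng.random((2, 8)), rng.random((2, 8)))
```

The auxiliary parameter term is what pushes the encoder's features to carry information about how the frames were degraded, rather than only about their content.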

2. Atmospheric Turbulence Correction via Variational Deep Diffusion

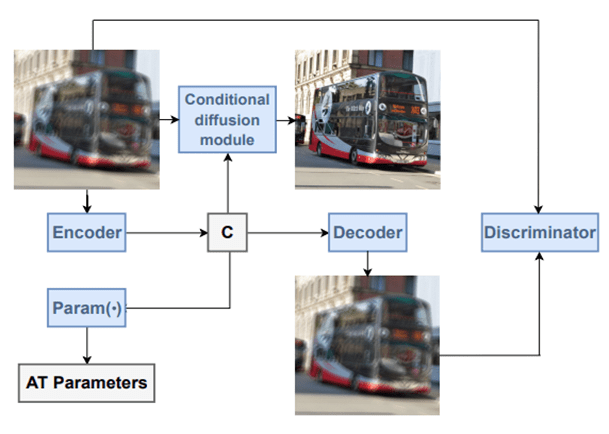

Atmospheric turbulence (AT) correction is a challenging restoration task because AT introduces two distortions: geometric distortion and spatially variant blur. If left unaddressed, these distortions can significantly degrade the performance of downstream vision tasks. Diffusion models have achieved impressive results in photo-realistic image synthesis and beyond. In this paper, we propose a novel deep conditional diffusion model within a variational inference framework to solve the AT correction problem. The framework improves performance by learning latent prior information from both the input and the degradation process. This learned information is then used to further condition the diffusion model. Experiments are conducted on a comprehensive synthetic AT dataset, on which the proposed framework achieves good quantitative and qualitative results.
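To make the two distortions concrete, here is a toy forward model in NumPy. A smooth random displacement field first warps the frame (geometric distortion). A Gaussian blur whose width varies across the image is then applied (spatially variant blur). This is a simplified sketch under stated assumptions, not the simulator used to build the dataset. The nearest-neighbour warp, the smoothed-noise tilt model, the warp-then-blur order, and the left-to-right blur ramp are illustrative choices, and all function names are hypothetical.

```python
import numpy as np

def warp_nearest(img, dx, dy):
    """Geometric distortion: pixel (y, x) takes the value at (y + dy, x + dx),
    rounded to the nearest pixel and clamped to the image border."""
    h, w = img.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    ys = np.clip(np.rint(yy + dy).astype(int), 0, h - 1)
    xs = np.clip(np.rint(xx + dx).astype(int), 0, w - 1)
    return img[ys, xs]

def gaussian_kernel(sigma, radius):
    """Normalised 2-D isotropic Gaussian of size (2*radius+1) x (2*radius+1)."""
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def spatially_variant_blur(img, sigma_map, radius=3):
    """Each output pixel averages its neighbourhood with its own Gaussian
    width sigma_map[i, j]. Direct O(H*W*K^2) loop, fine for a toy example."""
    h, w = img.shape
    size = 2 * radius + 1
    padded = np.pad(img.astype(float), radius, mode="edge")
    out = np.empty((h, w), dtype=float)
    for i in range(h):
        for j in range(w):
            k = gaussian_kernel(sigma_map[i, j], radius)
            out[i, j] = np.sum(padded[i:i + size, j:j + size] * k)
    return out

def smooth_field(rng, shape, std, corr_sigma=3.0, radius=6):
    """Hypothetical tilt model: white noise smoothed by a wide Gaussian,
    then rescaled to the requested standard deviation (in pixels)."""
    noise = rng.normal(size=shape)
    field = spatially_variant_blur(noise, np.full(shape, corr_sigma), radius)
    return field / field.std() * std

def simulate_turbulence(img, tilt_std=1.0, sigma_range=(0.5, 2.0), seed=0):
    """Toy AT degradation: random geometric warp, then a blur whose width
    grows linearly from the left edge to the right edge (illustrative)."""
    rng = np.random.default_rng(seed)
    h, w = img.shape
    dx = smooth_field(rng, (h, w), tilt_std)
    dy = smooth_field(rng, (h, w), tilt_std)
    warped = warp_nearest(img, dx, dy)
    sigma_map = np.tile(np.linspace(*sigma_range, w), (h, 1))
    return spatially_variant_blur(warped, sigma_map)

# Usage: a vertical step edge becomes wavy (warp) and increasingly soft
# towards the right (spatially variant blur).
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0
degraded = simulate_turbulence(clean, tilt_std=1.5)
```

Both stages only move intensities or average them with normalised weights, so a constant image passes through unchanged. What gets displaced and smeared is structure such as edges, which is exactly what a restoration model must recover.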

Project Sponsor:

Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA).