My co-author Federico Bugni and I have been awarded the 2022 Arnold Zellner Award for the paper “Testing Continuity of a Density via g-order statistics in the Regression Discontinuity Design”, published in the Journal of Econometrics in 2021. The Zellner Award recognizes the best theoretical paper published by the Journal of Econometrics in a given year, following a selection process where associate editors and co-editors submit a list of nominations, and then an award committee comprised of fellows of the Journal of Econometrics selects the winner. The award was announced at the 50th Anniversary reception of the journal at the 2023 ASSA Annual Meeting in New Orleans, Louisiana.

My co-author Federico Bugni and I have been awarded the 2022 Arnold Zellner Award for the paper “Testing Continuity of a Density via g-order statistics in the Regression Discontinuity Design”, published in the Journal of Econometrics in 2021. The Zellner Award recognizes the best theoretical paper published by the Journal of Econometrics in a given year, following a selection process where associate editors and co-editors submit a list of nominations, and then an award committee comprised of fellows of the Journal of Econometrics selects the winner. The award was announced at the 50th Anniversary reception of the journal at the 2023 ASSA Annual Meeting in New Orleans, Louisiana.

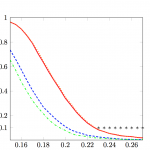

The winning paper proposes a novel test for the continuity of a density at a point based on the so-called g-order statistics. This testing problem is particularly relevant in the context of the regression discontinuity design (RDD), where it is a common practice to assess the credibility of the design by testing the continuity of the density of the so-called running variable at the cut-off point. The paper proposes a novel approximate sign test for this purpose, based on the simple intuition that, when the density of the running variable is continuous at the cut-off, the fraction of units under treatment and control local to the cut-off should be roughly the same. This means that the number of treated units out of the “q” observations closest to the cut-off, is approximately distributed as a binomial random variable with sample size “q” and probability 0.5. The approximate sign test has a number of distinctive attractive properties relative to existing methods used to test our null hypothesis of interest. First, the test does not require consistent non-parametric estimators of densities. Second, the test controls the limiting null rejection probability under fairly mild conditions that, in particular, do not require existence of derivatives of the density of the running variable. Third, the test is asymptotic validity under two alternative asymptotic frameworks that capture the fact that the fraction of useful observations close to the cut-off is quite small relative to the total sample size. In fact, the new test exhibits finite sample validity under stronger conditions than those needed for its asymptotic validity. Fourth, the test is simple to implement as it only involves computing order statistics, a constant critical value, and a single tuning parameter. This contrasts with existing alternatives that require kernel smoothing, local polynomials, bias correction, and under-smoothed bandwidth choices.

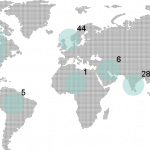

Cameron et al. (2008) provide simulations that suggest the wild bootstrap test works well even in settings with as few as five clusters, but existing theoretical analyses of its properties all rely on an asymptotic framework in which the number of clusters is “large.”

Cameron et al. (2008) provide simulations that suggest the wild bootstrap test works well even in settings with as few as five clusters, but existing theoretical analyses of its properties all rely on an asymptotic framework in which the number of clusters is “large.”

We have a new version of the paper

We have a new version of the paper